Image via CrunchBase

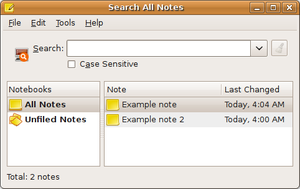

The services mentioned are identi.ca, Twitter, FriendFeed, Google Reader, LinkedIn, a blog, and Facebook. Matt needs seven services to cover all his bases. Sure, many of the services are syndicated to other services, but he checks some more (identi.ca) and some less (Twitter). What happens if I subscribe to the wrong service to follow Matt? Am I relegated to being a second-class social network citizen?

> Here is the arrangement I've ended up with:
>
> - If you just want to hear bits and pieces about what I'm up to, you can follow me on identi.ca, Twitter or FriendFeed. My identi.ca and Twitter feeds have the same content, though I check @-replies on identi.ca more often.
> - If you're interested in the topics I write about in more detail, you can subscribe to my blog.
> - If you want to follow what I'm reading online, you can subscribe to my Google Reader feed.
> - If (and only if) we've worked together (i.e. we have worked cooperatively on a project, team, problem, workshop, class, etc.), then I'd like to connect with you on LinkedIn. LinkedIn also syndicates my blog and Twitter.
> - If you know me "in real life" and want to share your Facebook content with me, you can connect with me on Facebook. I try to limit this to a manageable number of connections, and will periodically drop connections where the content is not of much interest to me so that my feed remains useful. Don't take it personally (see the start of this post). Virtually everything I post on my Facebook account is just syndicated from other public sources above anyway. I no longer publish any personal content to Facebook due to their bizarre policies around this.

I believe this situation frustrates the average person as much as privacy issues do, if not more. A lot of people just don't care about privacy, don't know what's being shared, are willing to make the trade-off for lack of alternatives, or simply don't feel locked in. You can see the feature creep in Facebook, now many people's main e-mail client, as it tries to be all things to all people.

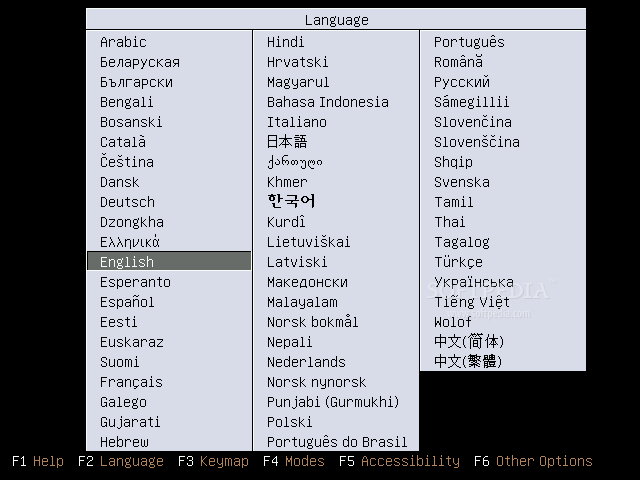

A week ago, Shashi wrote a wiki page called "Why build on StatusNet?" Evan Prodromou responded to the article with this dent: "GNUsocial, Diaspora, et. al.: use StatusNet to build your distributed social network. It'd be dumb to start over from scratch." While I agree with the second half of the statement (starting from scratch would certainly be dumb), is it fair to ask developers to build on StatusNet?

I'm no crack developer, but I'm going to attempt to answer this question by looking at as many Facebook features as possible, comparing each to StatusNet's current implementation, and gauging how difficult adding the missing pieces would be. I'm not going to pull punches. "Let's tie a bunch of unconnected services together and we're done" is not a realistic plan for successfully replacing Facebook (and LinkedIn, and your blog, and ...). I'll use the Wikipedia list of Facebook features as a starting point.

- Publisher: This is the core functionality of Facebook. You post something, it appears on your wall, in some cases it also appears on your friends' walls, and so on. StatusNet has a similar setup, but its feed-based network obviously does things a little differently.

  Status(Net): Mostly implemented.

- News Feed: This is the first page you see when you log into Facebook. Users see updates and can "Like" or comment on them. Photo and video posts are viewable on the same page. StatusNet's "Personal" tab is similar, but it is not the page you see on login (on Identi.ca, at least). That is easily changed, but the tab also lacks threaded comments and direct viewing of multimedia. There is a gallery plug-in, but threaded comments are much more difficult to do. Does StatusNet even want them?

  Status(Net): Partially Implemented.

- Wall: The wall is where all your FB updates go, and where people can respond. SN has your profile page, which is much the same, but again it is missing features such as comments.

  Status(Net): Partially Implemented.

- Photo and video uploads: FB houses many people's online galleries. It handles photos and videos, which can be tagged and commented on, and all of this flows into your news feed. SN has nothing like this, and the Gallery program has none of these abilities. This is a hard problem.

  Status(Net): Ground Zero.

- Notes: This is a blogging platform with tags and images. It's limited, but it's far beyond anything SN has. The only option is to use a Drupal add-on to turn a Drupal site into an SN hub. What's missing from Drupal? I don't know.

  Status(Net): Ground Zero.

- Gifts: I know. You're a geek. You hate gifts. You especially hate paying for gifts. Other people give me gifts all the time, though, so there must be some interest. SN doesn't have anything, but gifts seem easy to implement.

  Status(Net): Nothing, but not too difficult.

- Marketplace: Craigslist on FB? Sure, why not? SN is in the dark here.

  Status(Net): Ground Zero.

- Pokes: What's a poke? Who knows? Who cares! Still, SN would need them, because I can guarantee their absence would become a big deal. Again, SN has nothing here, though pokes wouldn't be too hard to add, I'd guess.

  Status(Net): Nothing, but not too difficult.

- Status Updates: This is what SN is all about, but its status updates are public. Could privacy happen on SN? I don't know.

  Status(Net): Mostly there.

- Events: FB is the event planner and the place to post the pictures after the event. That's really what it excels at. SN? Nowhere.

  Status(Net): Ground Zero.

- Networks, groups and like pages: SN has groups and is getting Twitter-like lists, but there are no networks as far as I can tell. I can "favorite" a post, but there is no way to create a page for other people to "favorite" or "like." Networks should just be designated groups. Likes need to be implemented in other places first, and pages could be added pretty easily after that.

  Status(Net): Mostly there.

- Chat: FB chat sucks, but it exists. SN doesn't really have chat, though some IRC and XMPP plug-ins can fake it. They're not private, though. Ouch. Think someone will get bitten by that one? Sure. Tack an XMPP server onto SN, and you're ready to go.

  Status(Net): Nothing, but not too difficult.

- Messaging: FB can be your e-mail client. It can even send mail outside the walled garden (last I checked). SN has a private messaging feature, and with federation it would work similarly.

  Status(Net): Implemented.

- Usernames: FB lets you get a page with your name, unless your name is the same as someone famous's, that is ;). SN puts your profile at a nice, readable URL by default.

  Status(Net): Implemented.

- Platform: This is probably the biggest thing FB has brought to the table, and the Farmville numbers tell you that it's pretty important. Can SN follow? OpenSocial leads the way. Unfortunately, it doesn't have that viral thing going for it.

  Status(Net): Ground Zero.

What do you think? Have I missed any Facebook features that SN needs or already has? Did I get something wrong? Do you think SN would make a decent social network, or is micro-blogging just the wrong model for it?
